PROJECT_MODULES

Vision-Language-Action System for Humanoid Manipulation

Automated pipeline to label 1,607 clips from 72 YouTube videos. Trained 19M-param Temporal Transformer achieving 77-85% accuracy on unseen demos. Hierarchical mapping to robot primitives with YOLO fusion for disambiguation.

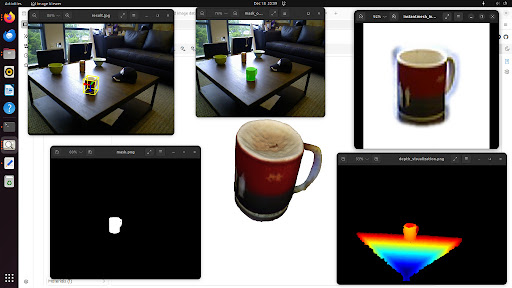

Open-Vocabulary 6D Pose Estimation

Zero-shot 6D tracking architecture with VLM-based semantic inventory. Achieved 76.5-100% ADD-S AUC on YCB-Video.
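For readers unfamiliar with the metric, ADD-S can be sketched as follows. This is a minimal illustration of the standard definition (mean distance from each ground-truth-posed model point to its nearest predicted-pose model point), not the project's evaluation code. The function name `add_s` and the convention of passing already-transformed point lists are assumptions made for brevity.

```python
import math

def add_s(gt_points, pred_points):
    """ADD-S: mean closest-point distance between two posed copies of a model.

    gt_points   -- model points transformed by the ground-truth pose
    pred_points -- the same model points transformed by the predicted pose
    Nearest-neighbour matching makes the metric tolerant of object symmetry.
    """
    total = 0.0
    for p in gt_points:
        # Distance from this ground-truth point to the nearest predicted point.
        total += min(math.dist(p, q) for q in pred_points)
    return total / len(gt_points)
```

The reported AUC is then obtained by sweeping a distance threshold and integrating the fraction of frames whose ADD-S falls below it.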

Dynamic Object Handover System

Vision-based HRI with real-time hand tracking. Robot adapts to human position for seamless object transfer.

Pedestrian-Aware AV Safety System

TTC-driven safety architecture. Fused LiDAR + RGB-D for pedestrian tracking with 90% success rate.
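A TTC (time-to-collision) rule of the kind described above might look like the sketch below. The constant-velocity model, the function names, and the 1.5 s / 3.0 s thresholds are illustrative assumptions, not values taken from the project.

```python
import math

def time_to_collision(range_m, closing_speed_mps):
    """TTC in seconds under a constant-velocity assumption.

    closing_speed_mps is positive when the gap to the pedestrian is shrinking.
    Returns infinity when the pedestrian is not approaching.
    """
    if closing_speed_mps <= 0.0:
        return math.inf
    return range_m / closing_speed_mps

def safety_action(ttc_s, brake_ttc_s=1.5, warn_ttc_s=3.0):
    """Map a TTC value to a coarse action (thresholds are hypothetical)."""
    if ttc_s < brake_ttc_s:
        return "brake"
    if ttc_s < warn_ttc_s:
        return "warn"
    return "cruise"
```

In a fused pipeline, `range_m` and `closing_speed_mps` would come from the pedestrian track rather than a single sensor reading.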

Humanoid Motion Planning

Implemented whole-body locomotion and motion planning for Unitree G1. Combined ZMP control, MPC-based balancing, and RL locomotion for stable manipulation and reaching tasks.
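As a rough illustration of the ZMP criterion, the sketch below uses the linear inverted pendulum (cart-table) approximation along one axis. The model choice, the function names, and the one-dimensional support interval are assumptions for illustration only and say nothing about the controller actually used on the G1.

```python
G = 9.81  # gravitational acceleration, m/s^2

def zmp_x(com_x, com_accel_x, com_height):
    """ZMP along one axis for a point mass held at constant height."""
    return com_x - (com_height / G) * com_accel_x

def is_balanced(zmp, foot_min, foot_max, margin=0.0):
    """True when the ZMP lies inside the support interval, shrunk by margin."""
    return foot_min + margin <= zmp <= foot_max - margin
```

A balance controller would adjust the commanded center-of-mass motion whenever `is_balanced` is about to fail.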

Terrain-Aware Navigation

Developed a perception pipeline converting depth clouds to elevation maps for quadruped footstep placement.
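The depth-cloud-to-elevation-map step can be sketched as a simple 2D binning pass. This is a minimal version that assumes points are already expressed in a gravity-aligned frame and keeps the highest point per cell; the function name, the dictionary representation, and the 5 cm default cell size are hypothetical.

```python
import math

def elevation_map(points, cell_size=0.05):
    """Bin (x, y, z) points into a grid and keep the maximum height per cell.

    Returns a dict mapping integer cell indices (ix, iy) to height in metres.
    Cells that received no points are simply absent from the dict.
    """
    grid = {}
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        if key not in grid or z > grid[key]:
            grid[key] = z
    return grid
```

A footstep planner could then score candidate footholds by the height and local roughness of neighbouring cells.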

RL Locomotion + Safety

Trained terrain-adaptive locomotion with PPO and integrated CBF as a real-time safety filter. Achieved zero-fall locomotion with 99% unsafe action rejection.
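To show what a CBF safety filter does, here is a toy one-dimensional sketch. It assumes single-integrator dynamics (x' = u) and the barrier h(x) = x_max - x, for which the condition h' >= -alpha * h reduces to u <= alpha * (x_max - x). The function name, the dynamics, and the gain are assumptions; the real system would apply the same idea to the robot's full state, typically through a small QP.

```python
def cbf_filter(u_nom, x, x_max, alpha=5.0):
    """Clamp a nominal action so the CBF condition holds.

    Returns (u_safe, rejected), where rejected is True when the
    nominal action violated the constraint and had to be modified.
    """
    u_bound = alpha * (x_max - x)  # largest action allowed by the barrier
    if u_nom <= u_bound:
        return u_nom, False
    return u_bound, True
```

Counting how often `rejected` is True over the policy's unsafe proposals gives a rejection rate comparable in spirit to the one quoted above.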

Quadruped Locomotion (RL)

Trained ANYmal quadruped policies using Proximal Policy Optimization. Achieved robust traversal on irregular terrain with 0-fall safety constraints.
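For context, the clipped surrogate objective at the core of Proximal Policy Optimization can be written in a few lines. This is a framework-free sketch of the standard formula; the function names and the default clip range of 0.2 are assumptions and are not taken from the training setup described here.

```python
def ppo_clip_term(ratio, advantage, eps=0.2):
    """Per-sample PPO term: min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    clipped = max(1.0 - eps, min(ratio, 1.0 + eps))
    return min(ratio * advantage, clipped * advantage)

def ppo_clip_objective(ratios, advantages, eps=0.2):
    """Mean clipped surrogate over a batch (to be maximized)."""
    terms = [ppo_clip_term(r, a, eps) for r, a in zip(ratios, advantages)]
    return sum(terms) / len(terms)
```

Here `ratio` is the new-to-old policy probability ratio for the sampled action; clipping removes the incentive to move the policy far from the one that collected the data.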

VIO + Footstep Planning

Fused Visual-Inertial Odometry with footstep planning. Enabled autonomous navigation in GPS-denied environments with <10 cm drift.

EMG-Controlled Prosthetic Arm

Built a 5-DOF prosthetic arm controlled via EMG signals. Deployed real-time gesture classification on ESP32 using XGBoost, achieving 96% accuracy.